Just over 100 days ago, a team of us gathered at Evans Hall on the UC Berkeley campus to design a new kind of learning experience - a Generative AI 1-Week Intensive.

The faculty “Dream Team,” as Prof. Xiao-Li Meng called them in the Harvard Data Science Initiative’s press release, had flown in from Boston, Munich, Lausanne, and Vancouver. My team at Next Gen Learning (NGL) was privileged to work alongside them.

This wasn’t a regular course-design workshop.

Our goal was to combine the best of humans and machines for a deeper, faster, and more applied learning experience than today’s online courses can offer. We already knew that pairing live faculty with the precision and personalization of AI tutors improves engagement and speed to comprehension. Now we had the opportunity to test that theory formally - and at scale - with some of the world’s top academic and industry minds.

Two weeks ago, we presented the course to 130+ participants from around the world. This is a screenshot from our opening lecture showing student locations behind a wall of student emojis during Prof. Meng’s welcome address:

Our AI Campus integrates live video classrooms with instant transcripts so that AI tutors can weave seamlessly in and out of each learner’s journey. After the lecture is finished, AI podcast hosts summarize and relate the lecture to each student’s professional context and learning goals.

The campus is alive in more ways than one. I’ll explain how - and introduce the other generative AI technologies we integrated - but first, the results.

The Results

Net Promoter Score (NPS): +78

50% of total learning time (9 of 18 hours) was spent with Paski, our AI Tutor

Why does this matter?

Because it validates something essential: that a course taught by humans and machines together can achieve high quality, high engagement, and meaningful impact.

It also points, to me, to a future where learners, faculty members, and institutions will work with AI collaborators. This gets me very excited.

Education’s problem today isn’t access — the internet solved that decades ago. The problem is engagement. And generative AI, when used thoughtfully, may be the key to fixing it.

Here’s what participants said:

Over the past six months, and particularly during the intense past 100 days, the NGL team has learned a lot about how to integrate AI into teaching and learning.

This post covers several topics. We hope our learnings will be useful to educators, technologists and leaders who seek to integrate generative AI thoughtfully and responsibly into their work. I have included a range of screencasts to more fully showcase our work and underlying methods.

What This Post Covers

50% of student learning time was spent with Paski, our AI Tutor

Why most AI tutors fail

How we designed Paski

How Paski works technically

Integrating faculty’s Digital Twins

Personalized lecture debriefs

The Personalized Curriculum / Personalized Textbook

Blurring the line between education and consulting

What didn’t work

The student experience - in their words

Personalizing for corporate teams

What’s next

1. 50% of Student Learning Time with Paski

Students spent nine hours in one week learning with Paski - half of their learning time. That’s extraordinary engagement.

We had initially designed the course for 35% of time with AI collaborators. The fact that students exceeded that target suggests that Paski was not just functional - it was compelling. Here is a summary graphic from our course blueprint:

On the final day, one student asked, “Can I keep Paski forever?” That line captures what’s possible when an AI tutor feels integrated, not optional.

And we do think about a future where AI tutors follow learners across programs, building personalized knowledge graphs and helping connect theoretical insights to professional practice over time. But we’re not there yet.

2. Why Most AI Tutors Fail

Most AI tutors fail because they sit on the periphery of the learning experience.

They’re bolted on, not built in.

They lack awareness of the student’s goals, progress, and materials.

They’re under-designed, under-trained, and under-used.

It’s like asking a human tutor to wait quietly in the corner of a classroom, hoping a student might start a deep conversation. No surprise that adoption rates are often under 3%.

By contrast, success comes from designing the AI tutor into the core learning path - as intentionally as a faculty-led session or group discussion.

3. How We Designed Paski

We embedded Paski directly into the student’s core learning sequence - not as a helper, but as an essential participant.

We gave it a voice, and let students respond in theirs. OpenAI’s Realtime API has made this really easy to do technically.

We made voice control student-driven - they decide when to start and stop speaking. That small UX choice made a big difference in comfort and flow.

Result: 89% of Paski interactions were by voice, with the balance via text.

And because Paski supports 50 languages, it lowers cognitive load for multilingual learners.
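In code, student-driven voice control is essentially push-to-talk: audio only becomes a turn when the student releases the control. The sketch below is a hypothetical client-side controller, assuming a Realtime API session in which automatic turn detection has been switched off in the session configuration. It only builds the JSON client events (`input_audio_buffer.clear`, `input_audio_buffer.append`, `input_audio_buffer.commit`, `response.create`) rather than opening a WebSocket; the class and method names are illustrative, and the event payloads should be checked against OpenAI's current Realtime API reference.

```python
import base64
import json
from dataclasses import dataclass, field


@dataclass
class PushToTalk:
    """Hypothetical push-to-talk controller (illustrative names).

    Assumes server-side turn detection is disabled, so audio only counts
    as a finished turn once the client commits it.
    """

    speaking: bool = False
    outbox: list = field(default_factory=list)  # JSON events to send over the socket

    def _send(self, event: dict) -> None:
        self.outbox.append(json.dumps(event))

    def press(self) -> None:
        # Student starts speaking: discard any stale, uncommitted audio.
        self.speaking = True
        self._send({"type": "input_audio_buffer.clear"})

    def audio_chunk(self, pcm: bytes) -> None:
        # Audio is only streamed while the student holds the talk control.
        if self.speaking:
            self._send({
                "type": "input_audio_buffer.append",
                "audio": base64.b64encode(pcm).decode("ascii"),
            })

    def release(self) -> None:
        # The student decides the turn is over: commit audio, request a reply.
        if not self.speaking:
            return
        self.speaking = False
        self._send({"type": "input_audio_buffer.commit"})
        self._send({"type": "response.create"})
```

Keeping the commit on the client side means a pause mid-thought never prompts the tutor to jump in, which is one plausible reason this small choice helps comfort and flow.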

Faculty co-design each tutorial to mirror how they’d guide a 1-on-1 discussion with their best teaching assistant. For example:

Day 1 of the Generative AI 1-Week Intensive:

Live lecture by Stephanie Dick on the history of intelligence and AI

1-on-1 tutorial with Paski: “How to assess the intelligence of an AI system”

Group debrief with Stephanie.

This thread - live faculty, AI tutorial, live reflection - defines the heart of our pedagogy: human inspiration, machine precision, integrated design.

Here’s a 4-minute accelerated demo of how students would experience that tutorial with Paski:

Now let’s move to the more technical explanation.

4. How Paski Works Technically

Conceptually, Paski is an AI tutor agent orchestrated through a structured sequence of:

Contextual prompts (grounded in course materials)

Student-profile variables

Tool calls (load documents, whiteboards, slides; get reference materials; retrieve message history; get lecture transcript; call another agent; etc.)

Ongoing dialogue memory
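To make this orchestration more concrete, here is a minimal sketch of one tutor turn: the model either replies or requests a tool call, tool results are appended to the dialogue memory, and the loop continues until the model replies. It assumes a generic chat model represented by a plain callable. The tool names echo two items from the list above, but their signatures and return values are hypothetical stubs, not Paski’s actual implementation.

```python
from typing import Callable


# Hypothetical tool stubs. A real system would query a content store,
# the live-transcript service, or another agent.
def get_lecture_transcript(day: int) -> str:
    return f"[transcript for day {day}]"


def load_document(name: str) -> str:
    return f"[loaded {name} alongside the tutor]"


TOOLS: dict[str, Callable[..., str]] = {
    "get_lecture_transcript": get_lecture_transcript,
    "load_document": load_document,
}


def tutor_turn(model: Callable[[list[dict]], dict], memory: list[dict],
               student_input: str, max_steps: int = 5) -> str:
    """Run one student turn against a model that returns either
    {"reply": text} or {"tool": name, "args": {...}}."""
    memory.append({"role": "user", "content": student_input})
    for _ in range(max_steps):
        action = model(memory)
        if "reply" in action:
            memory.append({"role": "assistant", "content": action["reply"]})
            return action["reply"]
        # Run the requested tool and record its result in dialogue memory,
        # so the next model call can ground its answer in it.
        result = TOOLS[action["tool"]](**action["args"])
        memory.append({"role": "tool", "content": result})
    raise RuntimeError("no reply within max_steps")


# A scripted stand-in for an LLM: fetch the transcript first, then answer.
def scripted_model(memory: list[dict]) -> dict:
    if memory[-1]["role"] == "user":
        return {"tool": "get_lecture_transcript", "args": {"day": 1}}
    return {"reply": f"Building on {memory[-1]['content']}, let's begin."}


memory = [{"role": "system", "content": "You are Paski, a course tutor."}]
print(tutor_turn(scripted_model, memory, "I'm ready for today's tutorial."))
```

Capping the loop with `max_steps` guards against a model that keeps calling tools without ever answering the student.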

Here is a conceptual diagram of how it all works:

I’d like to share a little more of what’s under the hood. Here is a screencast of the Initial Prompt we use for the AI tutorial shown in the screencast above, “How to assess the intelligence of an AI system”. I also expand on how we use a range of merge variables to personalize tutorials, and how we load artefacts like documents, whiteboards and presentations alongside the tutor, so that AI tutorials extend into students’ work on those artefacts, all guided and supported by the AI tutor:

Each tutorial is scaffolded through prompt engineering that personalizes tasks and dynamically loads relevant artefacts - enabling Paski to shift from conversational tutor to quasi-consultant.
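Merge variables can be as simple as a prompt template filled from each student’s profile before a tutorial starts. Below is a minimal sketch using Python’s standard-library `string.Template`. The prompt wording, variable names and profile fields are hypothetical stand-ins, not the actual Initial Prompt shown in the screencast.

```python
from string import Template

# Hypothetical tutorial prompt; $names are merge variables filled per student.
TUTORIAL_PROMPT = Template(
    "You are Paski, guiding $first_name through the tutorial \"$tutorial_title\".\n"
    "Learner context: $role in $industry. Learning goal: $learning_goal.\n"
    "Artefacts loaded alongside you: $artefacts.\n"
    "Relate every example back to the learner's own context."
)


def build_tutorial_prompt(profile: dict, tutorial_title: str, artefacts: list[str]) -> str:
    # substitute() raises KeyError if the profile lacks a merge variable,
    # instead of silently leaving a raw "$placeholder" in the prompt.
    return TUTORIAL_PROMPT.substitute(
        profile,
        tutorial_title=tutorial_title,
        artefacts=", ".join(artefacts) or "none",
    )


# Illustrative, made-up learner profile.
profile = {
    "first_name": "Alex",
    "role": "Risk Manager",
    "industry": "insurance",
    "learning_goal": "draft an AI strategy for the risk function",
}
print(build_tutorial_prompt(profile, "How to assess the intelligence of an AI system",
                            ["AI strategy document", "whiteboard"]))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice in this sketch: an incomplete profile fails loudly before the tutor ever sees a half-filled prompt.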

In this course, Paski guided students through building their own AI Strategy, based on DAIN Consulting’s 9-part framework (first published in Harvard Data Science Review - and more about the incredible DAIN team later). The result: participants left with a work-ready strategy document that applied the course frameworks directly to their professional context.

5. Integrating Faculty Digital Twins

Students begin their journey with a personalized video message from Prof. Xiao-Li Meng’s avatar - his “digital twin.” The twin then hands over to Paski for an onboarding conversation, in which Paski builds a deeper picture of each participant’s work history, interests and learning goals. The twin later reappears to post personalized reflections and questions in the participants’ social feed.

This screencast shows all of the above in action on our AI Campus:

This demonstrates the capabilities of digital faculty avatars. While we see clear value in contextual explainers and onboarding sequences, we’re still exploring their broader pedagogical potential beyond novelty.

6. Personalized Lecture Debriefs

One of my favourite innovations: personalized audio debriefs for live lectures. This activity uses NotebookLM’s Audio Overview feature, which I covered extensively in NGL’s launch post.

Using lecture transcripts, slides, and each student’s professional profile, our system generates a 10-minute podcast-style reflection linking key insights from the lecture to the student’s own work context. It’s like having two expert hosts sit down the next morning to discuss what you learned yesterday and prepare you for the day ahead. These were released the morning after each lecture to support spaced reflection and application.
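The “morning after” release is the easiest part of this to pin down in code. Below is a minimal sketch that bundles the three inputs named above (lecture transcript, slides, student profile) into a job and schedules it for the first 07:00 in the student’s local time after the lecture ends. The 07:00 slot, the `DebriefJob` structure and the function name are assumptions for illustration. The audio itself comes from a separate generation step (NotebookLM’s Audio Overview in this course), which is not modelled here.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta, timezone

RELEASE_TIME = time(7, 0)  # assumed "next morning" slot, in the student's local time


@dataclass
class DebriefJob:
    student_id: str
    sources: list[str]    # inputs handed to the audio-generation step
    release_at: datetime  # timezone-aware release time


def schedule_debrief(student_id: str, lecture_end: datetime, student_tz: timezone,
                     transcript: str, slides: str, profile: str) -> DebriefJob:
    """Bundle the debrief inputs and release them at the first RELEASE_TIME
    (student's local time) after the lecture ends."""
    local_end = lecture_end.astimezone(student_tz)
    release_at = datetime.combine(local_end.date(), RELEASE_TIME, tzinfo=student_tz)
    if release_at <= local_end:
        # Today's release slot has already passed, so use tomorrow morning.
        release_at += timedelta(days=1)
    return DebriefJob(student_id, [transcript, slides, profile], release_at)
```

Fixed UTC offsets keep the sketch simple and independent of the system’s time-zone database; a production scheduler would more likely use `zoneinfo` time zones so that daylight-saving changes are handled.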

Here is a screencast of this personalized lecture debrief in action:

Reach out if you’d like to see the custom prompt and data sources we use to create this personalized podcast audio.

This personalized lecture debrief, together with personalized welcome videos from faculty avatars, forms part of what we’re referring to as a personalized curriculum.

7. The Personalized Curriculum / Personalized Textbook

Personalization in education isn’t about endless customization. It’s about targeted relevance.

We personalize 5–10% of an otherwise stable core curriculum.

Generative AI allows this curriculum to adapt dynamically to a student’s role, goals, and professional context. Google’s Learn Your Way inspired this approach: start with shared material, personalize where it counts.

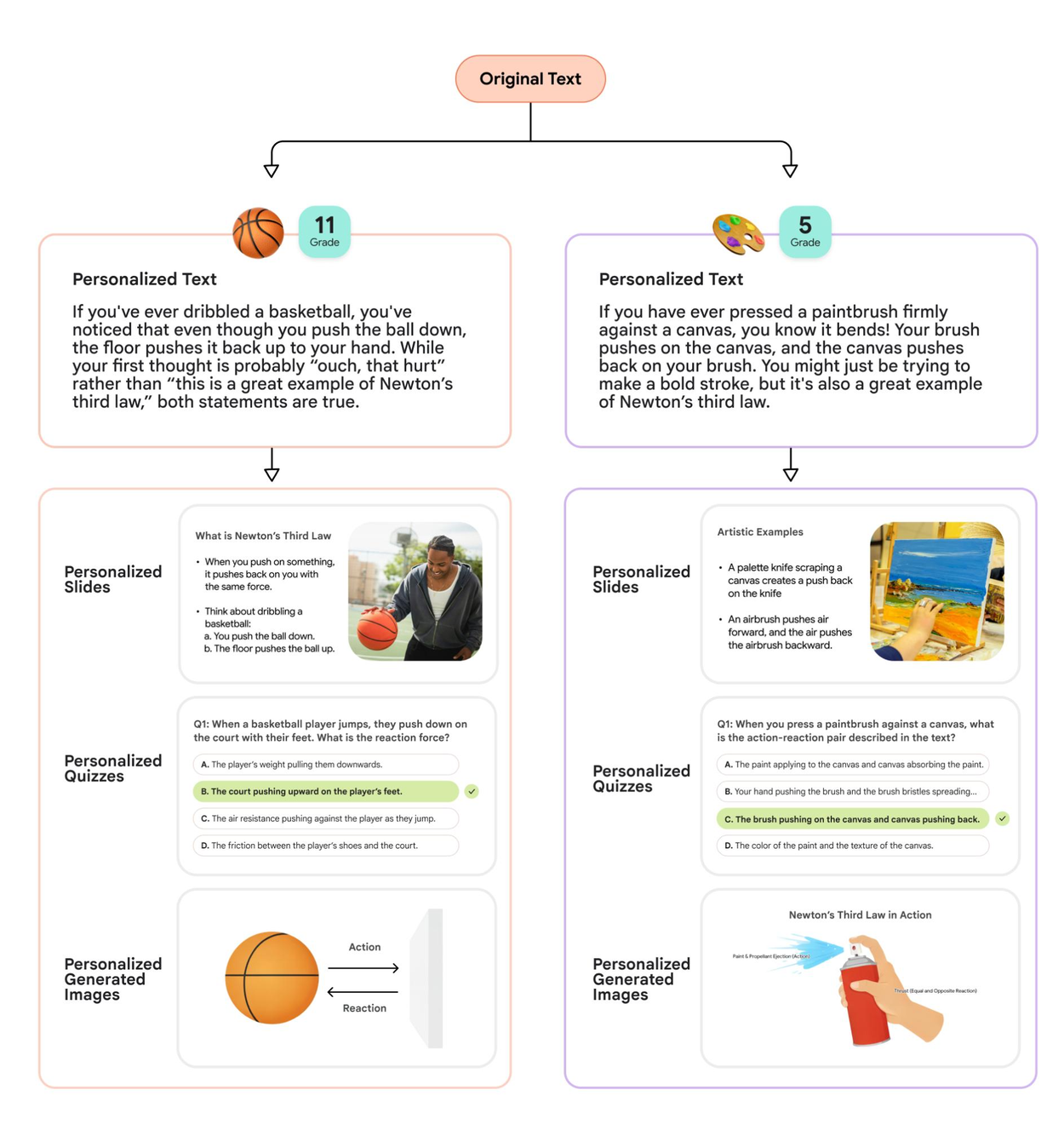

I like Learn Your Way’s simple approach to the personalization of a textbook:

Start with a standard body of text

Ask students which grade they are in, and let them choose an interest (specific sports, music, film, etc.)

Personalize the text to that student’s context based on their grade level and area of interest.

The illustration below shows how text describing Newton’s Third Law is personalized for two different learner profiles:

We are now personalizing foundational texts and articles as part of the student experience. Each personalized reading keeps 90% of the original article intact and adds examples and questions tailored to (1) the student’s professional context (their role, work history, industry, etc.) and (2) their learning goals.

Here is a screenshot of the start of a foundational article that describes a framework for implementing agentic workflows in an organization, personalized to the context of our Head of Education, Ester van der Walt.

The personalized reading opens with the same text as the original article:

It then threads in a personalized section titled “Consider this in your context, Ester”. Because the system understands Ester’s professional context and learning goals, it can include highly relevant sections like the one below:
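The threading pattern described above (original text kept intact, personalized callouts added after selected sections) can be sketched as a simple interleave with a guard on how much of the final reading is personalized. This sketch assumes the article is already split into sections and that the callouts were generated elsewhere. Measuring the share by word count and the 10% ceiling (taken from the 5 to 10% figure earlier in this section) are illustrative choices, not a description of NGL’s actual pipeline.

```python
def thread_callouts(sections: list[str], callouts: dict[int, str], first_name: str,
                    max_share: float = 0.10) -> str:
    """Insert a 'Consider this in your context' callout after selected sections.

    sections: original article sections, kept verbatim and in order.
    callouts: section index -> personalized text to place after that section.
    Raises ValueError if personalized words exceed max_share of the result.
    """
    parts: list[str] = []
    added_words = 0
    for i, section in enumerate(sections):
        parts.append(section)  # original text is never edited
        if i in callouts:
            heading = f"Consider this in your context, {first_name}"
            parts.append(f"{heading}\n{callouts[i]}")
            added_words += len(heading.split()) + len(callouts[i].split())
    total_words = sum(len(p.split()) for p in parts)
    share = added_words / total_words if total_words else 0.0
    if share > max_share:
        raise ValueError(f"personalized share {share:.0%} exceeds {max_share:.0%}")
    return "\n\n".join(parts)
```

Raising rather than trimming keeps the decision about what to cut with whoever generated the callouts, so the original article is never silently altered.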

Any level of personalization is possible. And we are continuing to research exactly how to personalize readings like this for maximum engagement and improved speed to comprehension.

8. Blurring the Line Between Education and Consulting

Teaching and consulting have always overlapped. What’s new is how AI can bridge them.

In this intensive course, Paski acted as both tutor and consultant, helping students design actionable AI strategies within their organizations - guided by faculty and supported by DAIN’s proven frameworks.

This is where our work with Dirk Hofmann, Ulla Kruhse-Lehtonen and their amazing team of Principals at DAIN Studios really shone. Dirk and Ulla were among the first to write about data and AI strategy, publishing their article “How to Define and Execute Your Data and AI Strategy” in the Harvard Data Science Review back in 2020. This article underpins the entire course, providing us with a rich framework to both teach and apply.

This ability to apply learning in context meaningfully increases the ROI of education for working professionals.

Here is a screencast of Paski in action as a copilot to students in building their AI strategy documents, together with an overview of the prompting and tool calling that makes this feature possible:
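One way to let a tutor act as a copilot on a document is to expose a narrowly scoped editing tool and validate every call before it touches the artefact. Below is a minimal sketch, assuming a document with nine numbered parts to mirror the 9-part framework mentioned earlier. The tool name, its JSON-schema parameters and the validation rules are hypothetical, and the framework’s actual section titles are deliberately not reproduced.

```python
import json

NUM_PARTS = 9  # mirrors the 9-part framework; section titles omitted

# Hypothetical tool definition in the JSON-schema style used for function calling.
UPDATE_SECTION_TOOL = {
    "name": "update_strategy_section",
    "description": "Replace the draft text of one part of the student's AI strategy document.",
    "parameters": {
        "type": "object",
        "properties": {
            "part": {"type": "integer", "minimum": 1, "maximum": NUM_PARTS},
            "text": {"type": "string"},
        },
        "required": ["part", "text"],
    },
}


def apply_tool_call(document: dict[int, str], arguments_json: str) -> str:
    """Validate the model's arguments and update the document in place.

    Returns a short status string to feed back to the model as the tool result.
    """
    try:
        args = json.loads(arguments_json)
        part, text = int(args["part"]), str(args["text"]).strip()
    except (KeyError, TypeError, ValueError) as err:  # JSONDecodeError is a ValueError
        return f"error: malformed arguments ({err})"
    if not 1 <= part <= NUM_PARTS:
        return f"error: part must be between 1 and {NUM_PARTS}"
    if not text:
        return "error: text must not be empty"
    document[part] = text
    return f"ok: part {part} updated ({len(text.split())} words)"
```

Returning errors as tool results rather than raising lets the model see what went wrong and retry within the same conversation.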

The working professional market agrees with us on the ROI of these programs: hundreds of students signed up for programs with these features over the past three weeks.

More on student feedback in section 10 below. But first:

9. What Didn’t Work Well

As with any endeavour, several things didn’t work well when we ran the 1-Week Intensive.

Our native transcripts, created by Generative AI, weren’t accurate enough. We caught this at the end of the first lecture and switched to using Descript for all lecture transcription. Descript is excellent!

Our group work didn’t work well. This failure wasn’t caused by any of our generative AI integrations, but it’s worth mentioning for completeness, and it was entirely our (my) fault. Our first group work session, made up of micro cohorts of 5 participants, was a poor learning experience. We quickly realised this was mostly because the groups weren’t facilitated, so we added facilitators to day 2’s group work, which corrected some of the issues. We think the remaining issues were due to suboptimal group composition and some technical problems with running live, web-based video rooms. We now have a new design for group work - facilitators, Miro-style boards and a tightly structured group-work agenda - that we believe will make group work successful in our upcoming courses.

10. The Student Experience

Students described the week as “transformative,” “intense,” and “immediately applicable.”

Several shared reflections on LinkedIn - from Frankfurt to Phoenix to California - showcasing the global reach and professional diversity of our cohort.

Ozren Winkler, M.Sc. Finance, CIA, Senior Manager Internal Audit EMEA at Mitsubishi Chemical, from Frankfurt, wrote about his AI learning journey here. Marcial Hernandez-Manzano from California wrote “I just completed a one-week 𝐇𝐃𝐒𝐈 – 𝐀𝐈 𝐒𝐭𝐫𝐚𝐭𝐞𝐠𝐲 𝐏𝐫𝐨𝐠𝐫𝐚𝐦, and it completely shifted my perspective about…”. Kaylen Johnson, from the Greater Phoenix Area, wrote about live lectures, collaborative lab sessions, and 1-on-1 tutoring with the AI tutor Paski in her post here.

11. Personalizing for Corporate Teams

We’ve also experimented with working with leadership teams - in one course including a group of 30 people from the same company - customizing their learning paths to align with their CEO’s specific AI agenda. Each individual left with an AI strategy document and high-value use cases for their role and function, and the CEO received a post-course report describing how their teams’ work helps refine their AI policy.

This model offers enterprise-level value at a fraction of the usual cost.

12. What’s Next

We’re designing a course to help educators integrate AI into online education - combining practical, ethical, and pedagogical dimensions.

If you’d like early access, leave your details and we’ll contact you first:

We’re building in the open - sharing our work, learning from others, and advancing the responsible use of generative AI in education. If you’re building the future of learning, I’d love to hear what’s working for you, and what isn’t.

Gratitude

This has been the fastest venture I’ve ever built - thanks to the force-multiplying power of AI and the extraordinary dedication of the NGL team and our collaborators. When Hagen Rode and I first set out to build NGL, we knew that this time would be different - faster, for sure. It is also turning out to be a rich experience, underpinned by inspiring collaborations.

Major thanks to our entire team for their tireless work in bringing all of this to life.

Adrian Visagie, Andre Grobler, Bronwen Henshilwood, Claire du Preez, Clara Soldani, Damian Fisher, Ester Van der Walt, Hagen Rode, Jacques Fourie, Jannah Ruthven, Paski and our other AI Agents, Robyn Costa, Rodney du Preez, Sammy-Jane Every, Stella Pickard, Vionne Schmidt. Special thanks to Graham, Mandy and Jen Paddock when we first trialled our work. And to Samer Salty and Rob Paddock for their ongoing support.

This work was made possible through close collaboration with incredible faculty: Prof. Xiao-Li Meng (Harvard), Prof. Ani Adhikari (Berkeley), Stephanie Dick (SFU), Dirk Hofmann (DAIN Germany), Ulla Kruhse-Lehtonen (DAIN Finland) and Vinitra Swamy (Scholé).

The DAIN team of Principals includes Hanna Gronqvist, Ivana Ovcaric, Gyorgy Paizs and Dustin Schwarz, and we couldn’t have done this work without their contributions. The team of Berkeley Data Science TAs who brought our Live Labs to life was led by Vinitra Swamy of Scholé and included Angela Guan (Microsoft), Anna Nguyen (Stanford), Jacob Warnagieris (Amazon), Kevin Miao (Apple), Maya Shen (CMU), Ryan Roggenkemper (Waymo), Tam Vilaythong (Google) and Will Furtado (Citadel).

The future of learning is very exciting. I can’t wait to share more of our progress soon.

Onwards,

Sam and the NGL Team